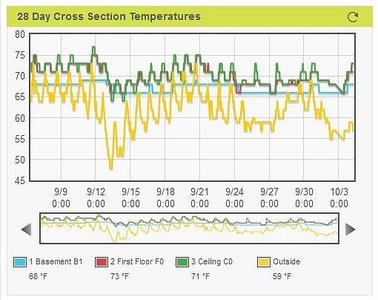

With so little sun, the temps in the house have fallen to the mid-60s, so it's time to turn on the HVAC system to take the edge off the chill and dampness.

As an aside, we only ran the AC one day this past summer. However, we ran the system in de-humidify mode for several days to take the humidity out of the basement.

By yesterday morning, the slabs throughout the house were uniformly at 66 degrees, so I thought it would be fun (and informative) to run a little experiment.

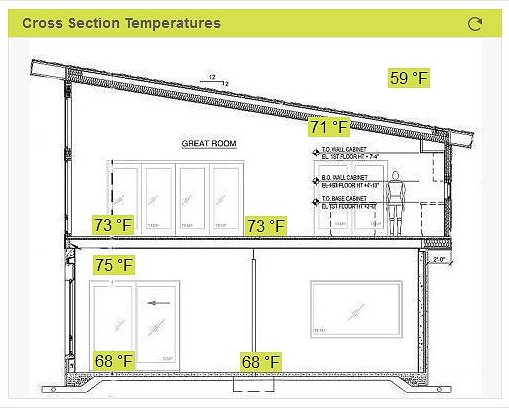

I turned on two mini-splits in the basement, on the theory that heating the basement would warm the main floor slab. Since the slab has an array of embedded temperature sensors, this would let me estimate the heat gained versus the energy consumed.

I started the test just after 9:00 using the splits under the kitchen and under BR2 and setting them to 75 degrees. The test ran for 24 hours so I could use Heating Degree Day (HDD) calculations. At the end of the test the temperature of the main floor slab was at 73 degrees for an increase of 7 degrees. I was surprised that the basement slab also increased, though only by 2 degrees. Air temperature in the basement was at 75 (as expected) and the main level was at 74.

There are two components to the heat calculations: a) how much heat was lost through the envelope during the test period and b) how much heat was retained/stored in the thermal mass of the structure.

Based on our computer model, the heat load for the house is 15,816 BTU per HDD. The average interior-exterior temperature difference was 13 degrees so the test simulated 13 HDD. The estimated heat loss is 205,608 BTU.
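The heat-loss arithmetic above can be sketched in a few lines of Python. The figures come from this post; the variable names are only illustrative, and treating a 24-hour test at an average 13-degree difference as 13 HDD follows the reasoning above rather than a standard base-temperature calculation.

```python
# Figures from the post; variable names are illustrative assumptions.
HEAT_LOAD_BTU_PER_HDD = 15_816  # modeled heat load of the house
avg_delta_t_f = 13              # average interior-exterior difference, degrees
test_days = 1                   # the test ran for 24 hours

# One day at an average 13-degree difference is treated as 13 heating degree days.
hdd = avg_delta_t_f * test_days
heat_loss_btu = HEAT_LOAD_BTU_PER_HDD * hdd

print(f"{hdd} HDD -> {heat_loss_btu:,} BTU lost through the envelope")
```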

The main floor slab consists of approximately 25 tons of concrete, which stores about 10,866 BTU per degree of temperature change. Since the temperature increased by 7 degrees, the slab retained about 76,000 BTU.

The basement slab rose by only 2 degrees, but it is twice as thick, so it retained about 42,000 BTU.
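As a rough check on the slab numbers, here is a minimal sketch using only the figures quoted above. The helper `stored_heat_btu` is a hypothetical name, and the basement capacity is backed out from the rounded 42,000 BTU figure rather than measured.

```python
def stored_heat_btu(capacity_btu_per_deg, temp_rise_deg):
    """Heat retained by a thermal mass for a given temperature rise."""
    return capacity_btu_per_deg * temp_rise_deg

# Main floor slab: capacity and temperature rise as stated in the post.
MAIN_SLAB_BTU_PER_DEG = 10_866
main_stored = stored_heat_btu(MAIN_SLAB_BTU_PER_DEG, 7)

# Basement slab: the post gives only the rounded result (about 42,000 BTU
# for a 2-degree rise), so the capacity here is inferred, not measured.
basement_stored = 42_000
basement_btu_per_deg = basement_stored / 2

print(f"Main slab stored:  {main_stored:,} BTU")
print(f"Basement capacity: {basement_btu_per_deg:,.0f} BTU per degree "
      f"({basement_btu_per_deg / MAIN_SLAB_BTU_PER_DEG:.2f}x the main slab)")
```

The implied basement capacity comes out at a little under twice that of the main slab, which is consistent with a slab that is twice as thick.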

| Component | BTU |
| --- | --- |
| Heat load (consumed) | 205,608 |
| Heat stored in thermal mass | 118,201 |
| Total heat | 323,809 |

Energy Used

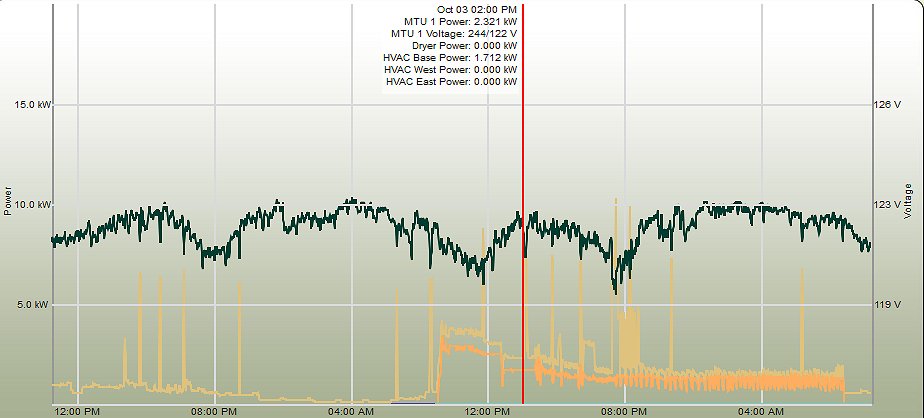

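The TED energy monitor recorded a total of 35.122 kWh consumed by the mini-splits during the test. Dividing the total heat by that figure gives about 9,220 BTU delivered per kWh consumed. Since 1 kWh of electricity is equivalent to about 3,412 BTU, this indicates an efficiency of about 270%.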
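The efficiency figure can be reproduced with a short calculation. This is a sketch using the totals from this post plus the standard conversion of roughly 3,412 BTU per kWh; the variable names are illustrative.

```python
BTU_PER_KWH = 3412.14      # standard energy conversion factor

total_heat_btu = 323_809   # heat lost through the envelope plus heat stored in the slabs
energy_used_kwh = 35.122   # reading from the TED energy monitor

# Heat delivered per unit of electricity consumed.
btu_per_kwh = total_heat_btu / energy_used_kwh

# Ratio of heat delivered to electrical energy consumed, in the same units.
efficiency = btu_per_kwh / BTU_PER_KWH

print(f"{btu_per_kwh:,.0f} BTU per kWh, efficiency about {efficiency:.0%}")
```

An efficiency above 100% is expected for a heat pump, since it moves heat from outside rather than generating it directly.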

In the next experiment (already started) I have turned the mini-splits off again and we will measure how long it takes for the house to fall back to 66 degrees.
